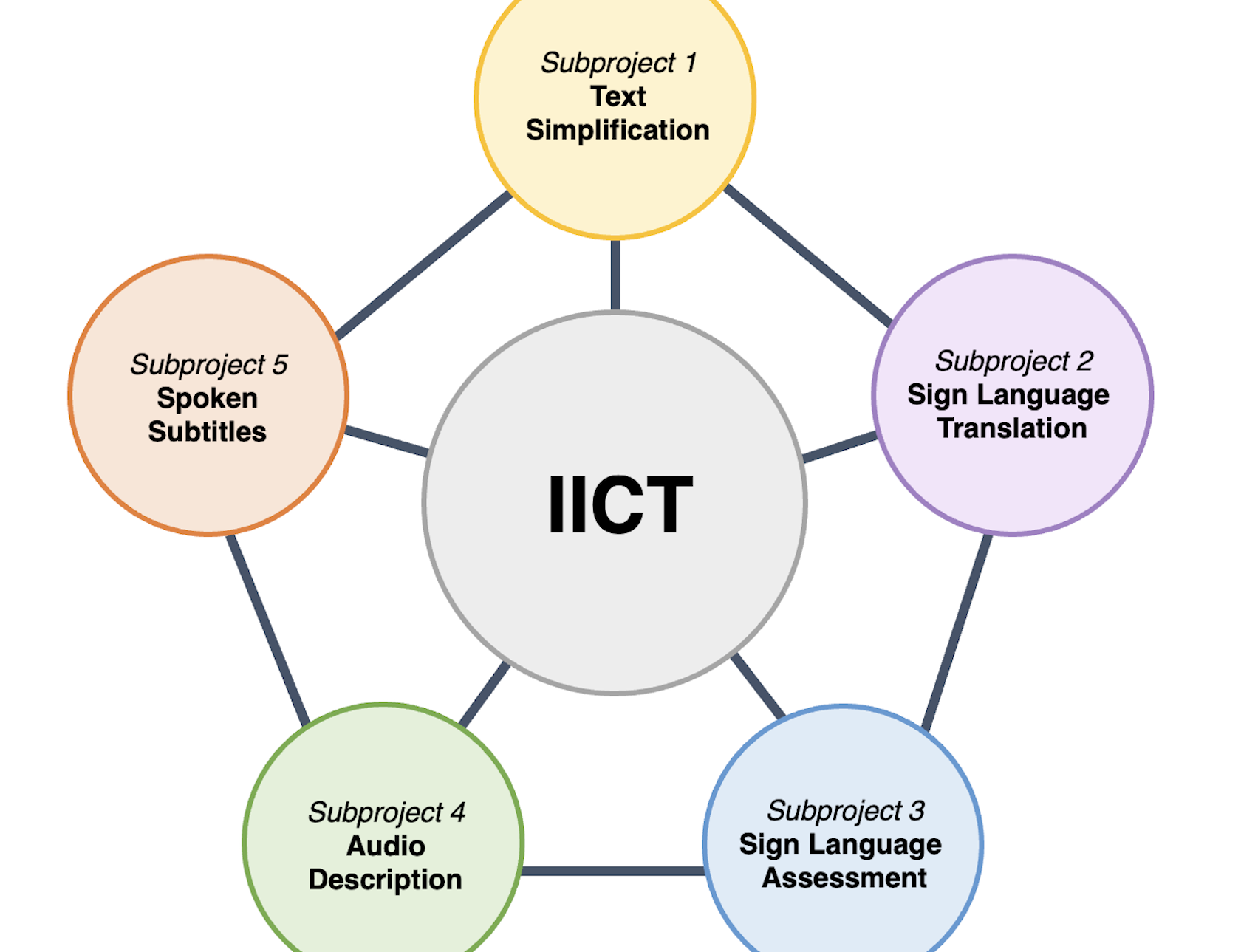

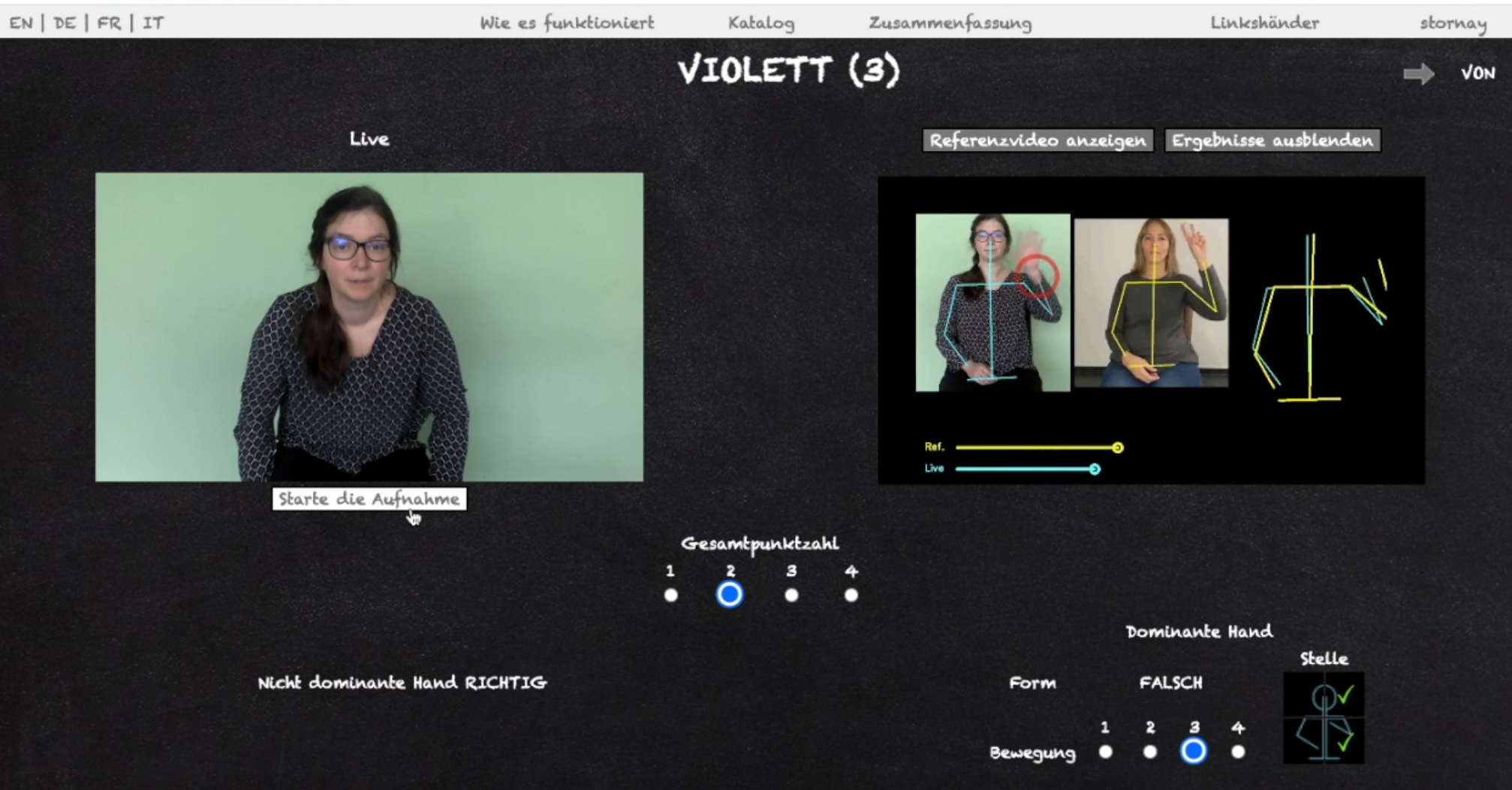

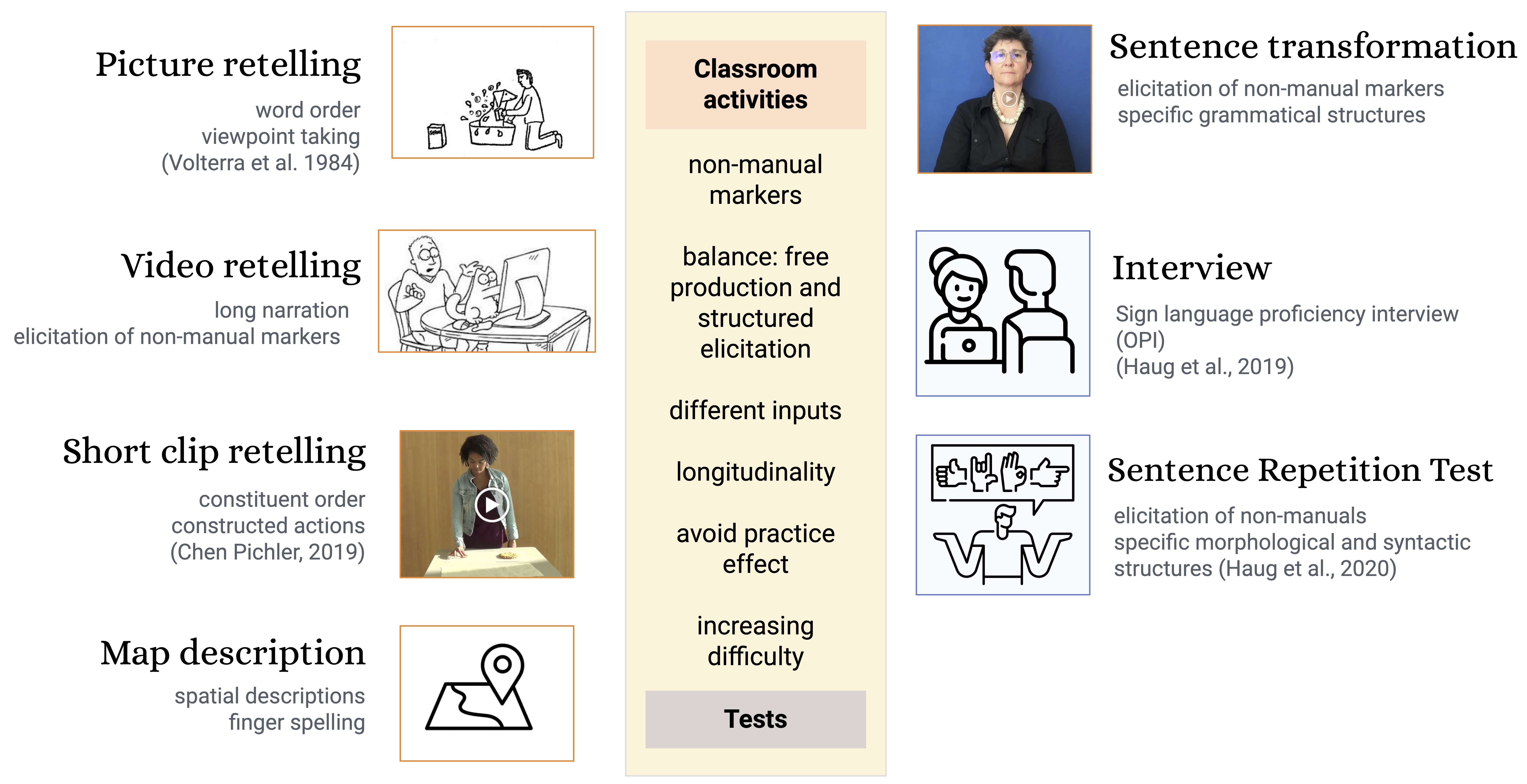

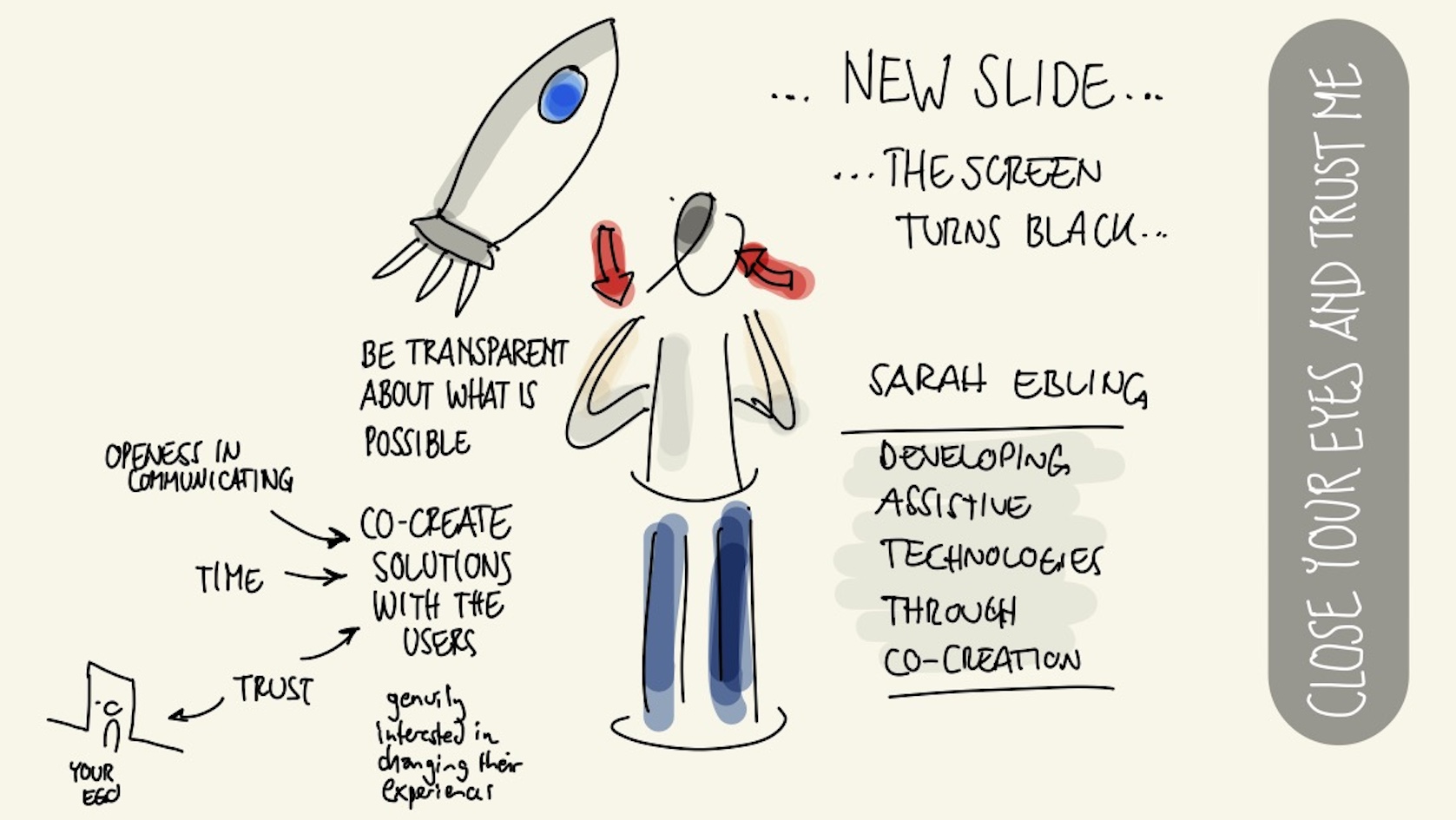

Our chair works on language-based assistive technologies and digital accessibility, with a focus on both basic and application-oriented research.

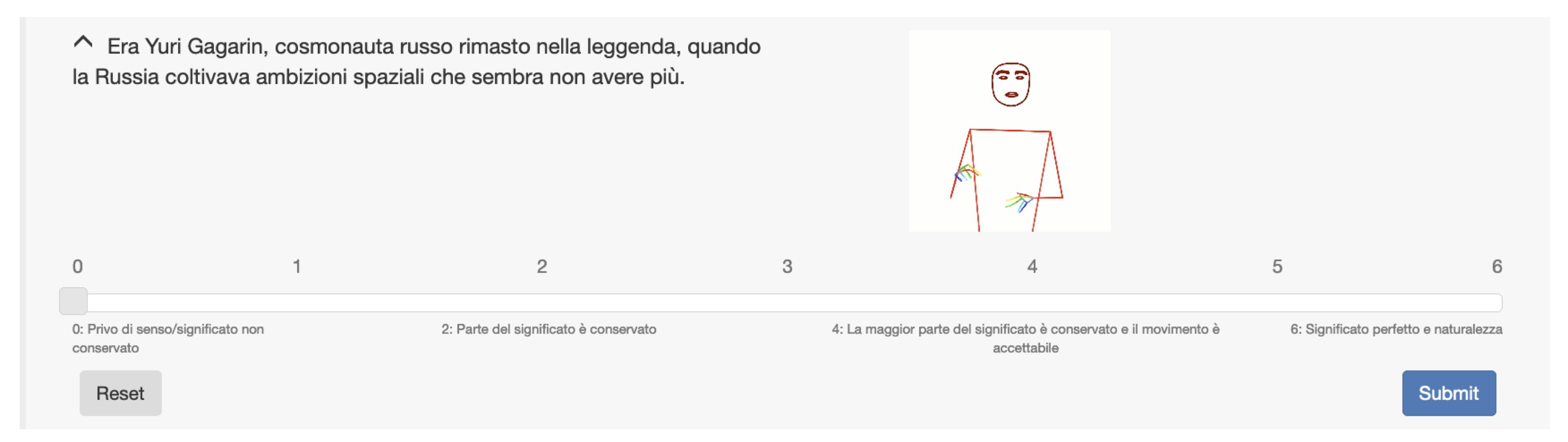

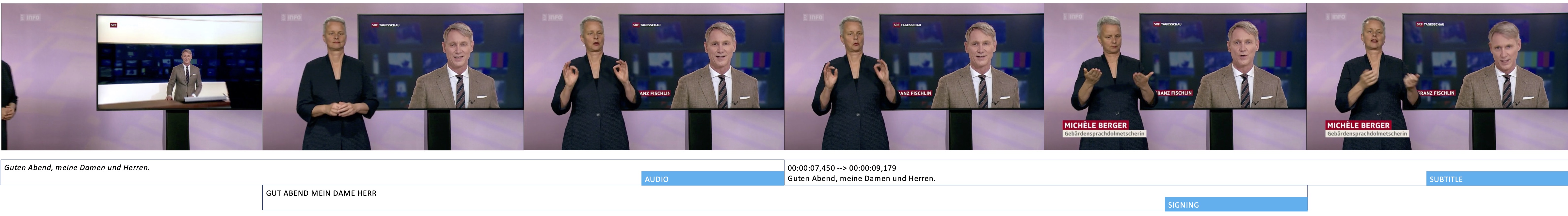

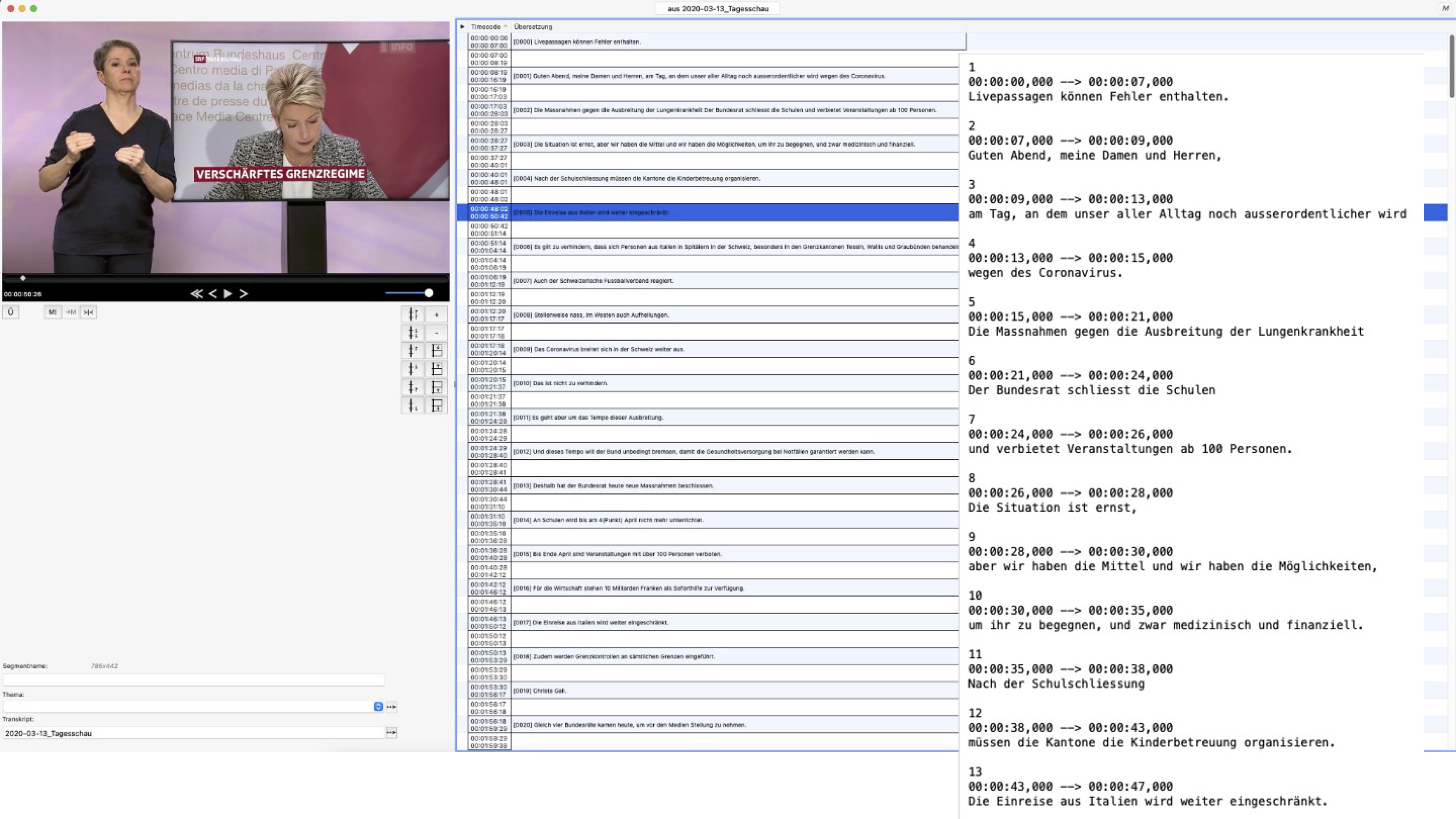

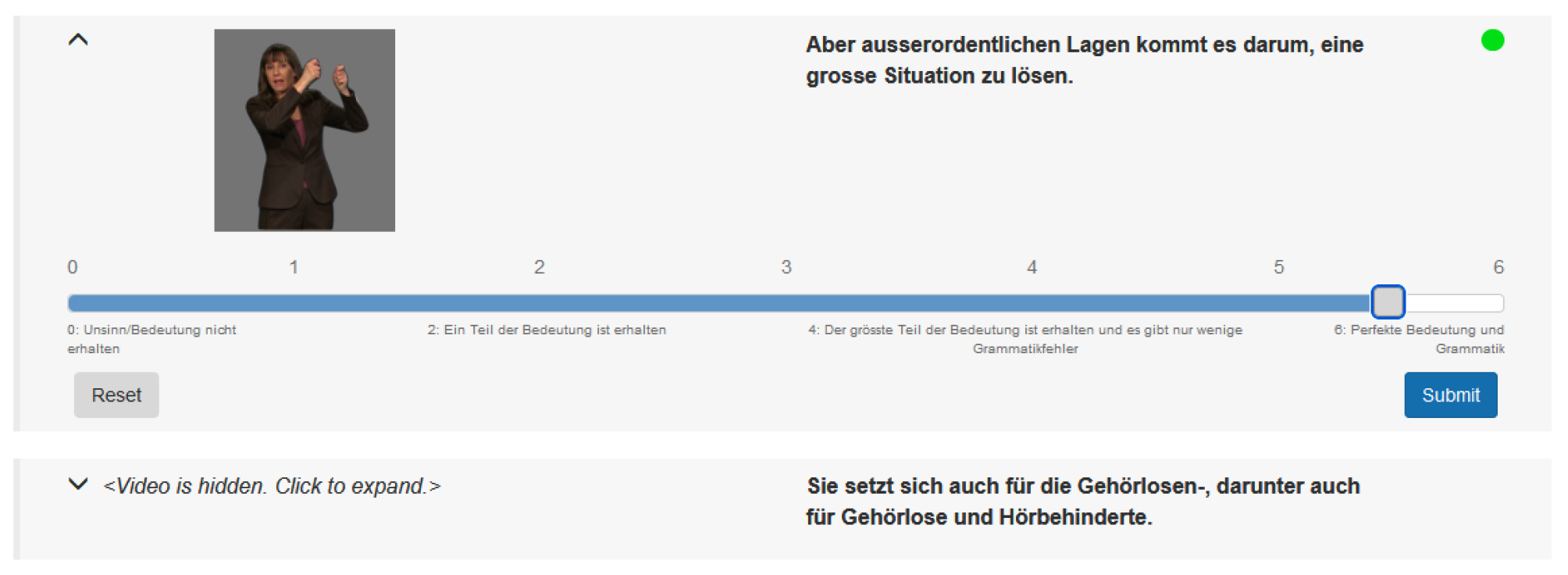

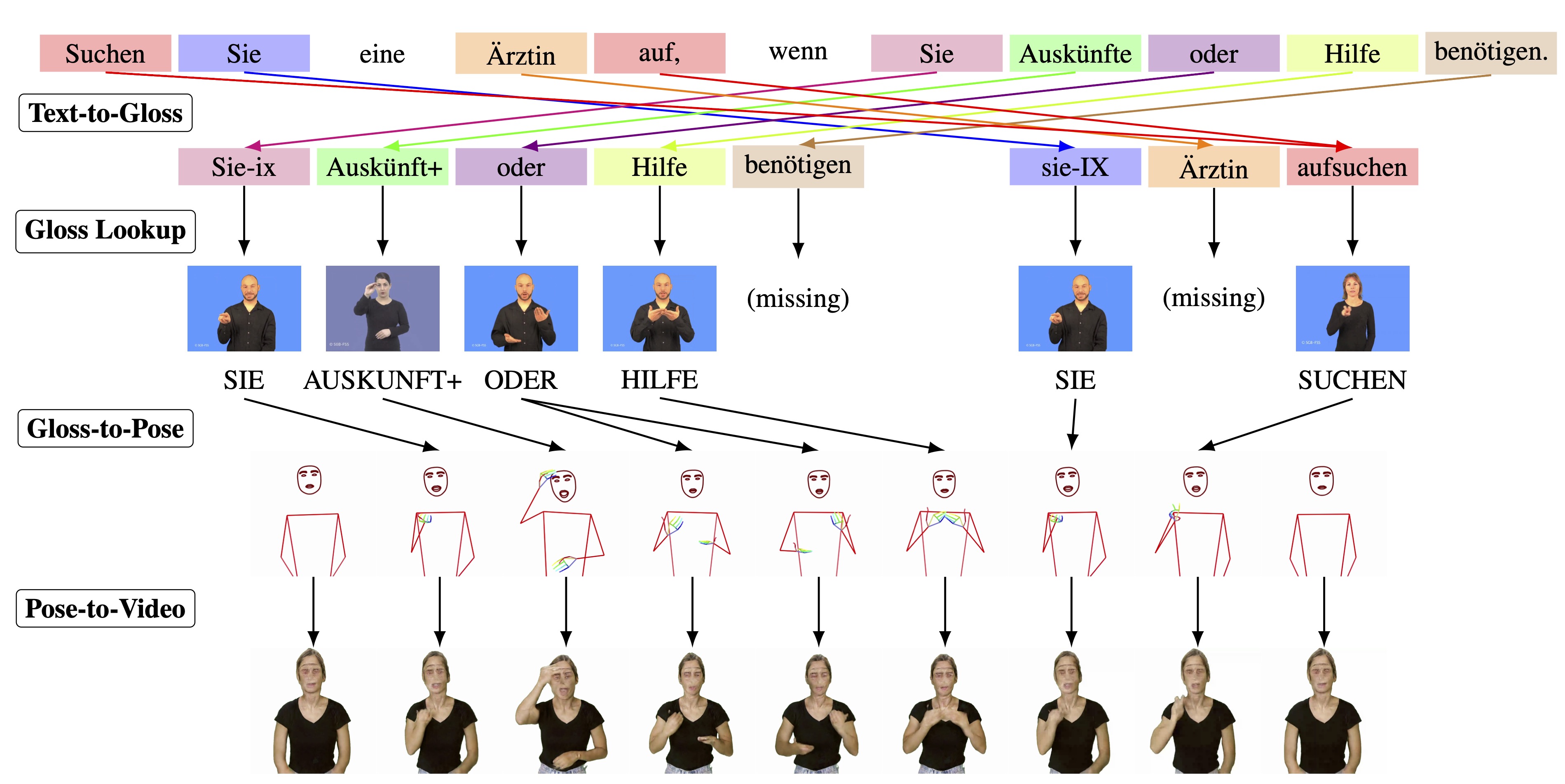

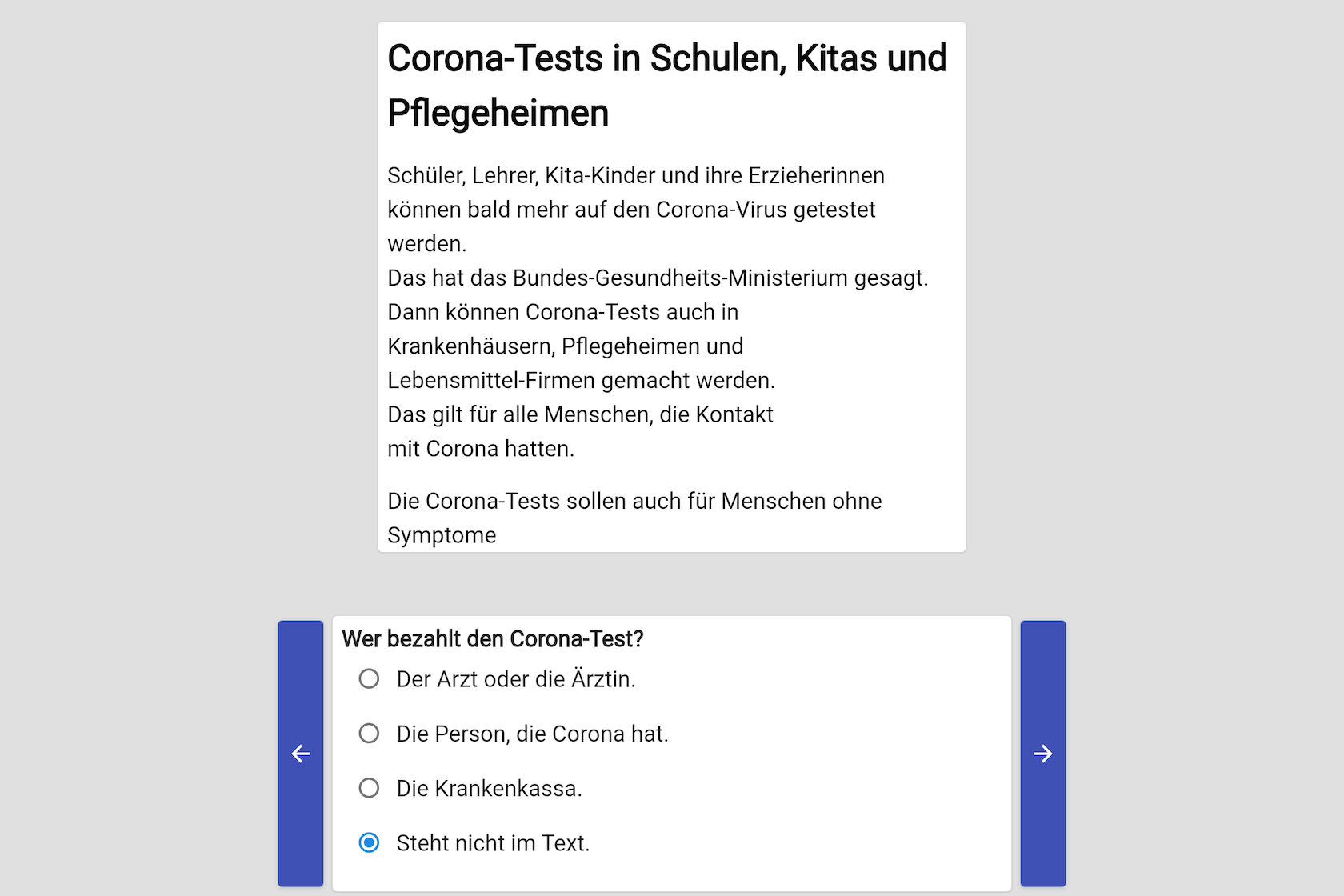

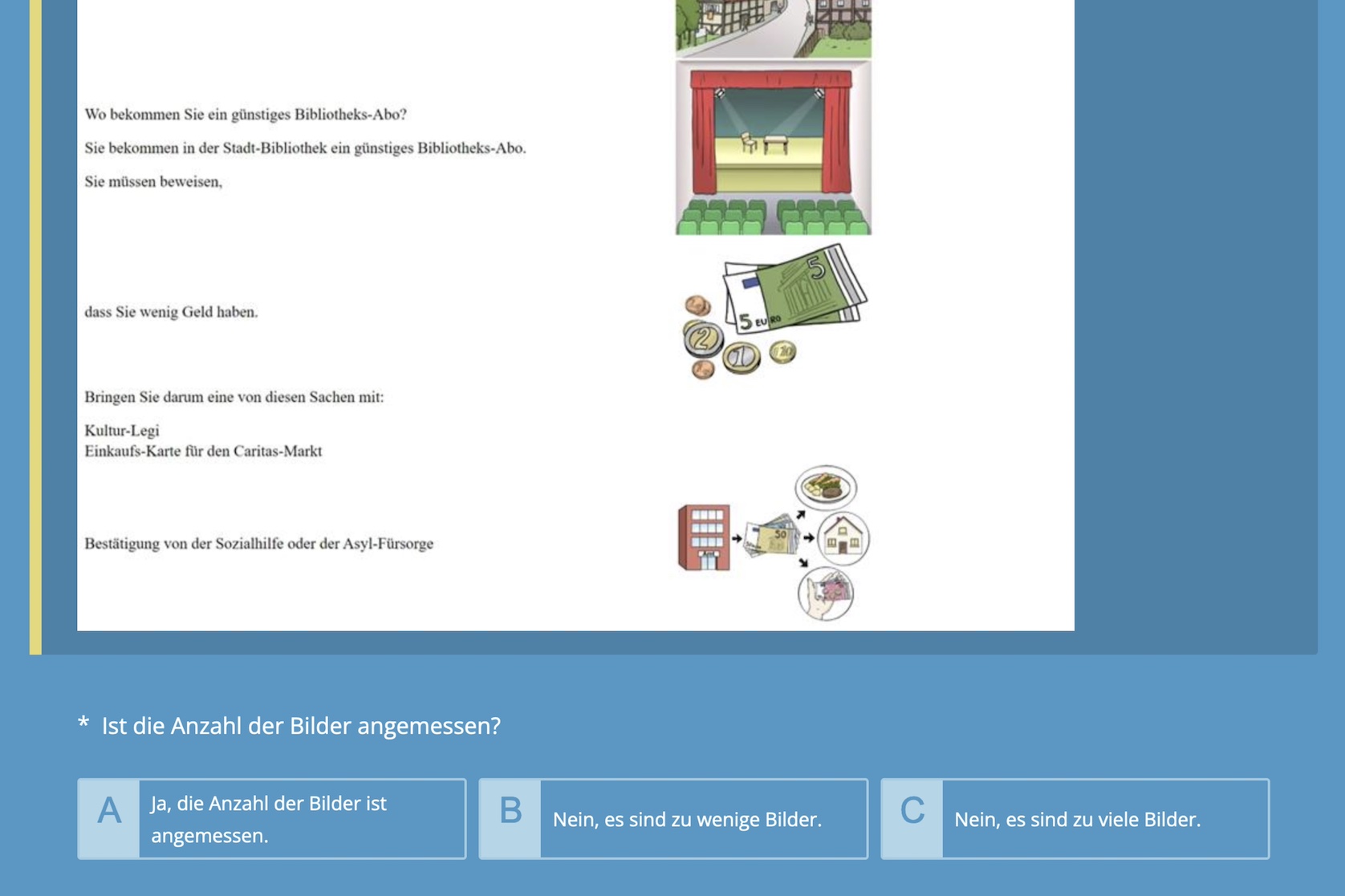

We subscribe to a broad definition of language and communication, in line with the UN Convention on the Rights of Persons with Disabilities (UN CRPD); as such, we deal with spoken language (text and speech), sign language, simplified language, Braille, pictographs, etc.

We combine language and communication with technology and accessibility in two ways:

Our technologies focus on the contexts of hearing impairments, visual impairments, cognitive impairments, and language disorders.

The group is headed by Prof. Dr. Sarah Ebling.